Why Your SaaS Needs a Docker Compose Setup Even If You’re Just One Person

Because the only thing worse than debugging production is debugging your local machine.

If you’re building a SaaS solo, the biggest productivity killer isn’t writing code — it’s setting up your damn environment.

You know the story:

You clone your repo on a new laptop or spin up a new dev box, run flask run or uvicorn main:app --reload, and boom — connection refused on localhost:5432.

Postgres isn’t running.

Your .env file is half missing.

Supabase changed a port.

And now you’re googling “how to reset a Postgres user password” for the third time this month.

That’s why I’ve stopped messing around with manual setups — and started containerizing my local environment using Docker Compose.

Not because it’s trendy.

Because it’s the most reliable way I’ve found to pull, build, and run my app in about a minute, once the base images are cached.

The indie dev reality

As solo devs, we move fast. We don’t have infra teams or onboarding docs. Most of our systems live in muscle memory and terminal history.

That’s fine when you’re in the groove — until you need to:

Revisit a project after a few months.

Share it with a collaborator.

Spin it up on a new machine.

Or just fix a quick bug and realize nothing runs anymore.

A solid local setup is like documentation that actually works.

Docker Compose is the simplest way to get there.

Why Docker Compose?

It’s not about “microservices” or “container orchestration.” Ignore that stuff.

Compose is just a YAML file that says:

“Here’s everything my app needs — run it all together.”

You can define your web app, Postgres, and even Supabase’s local stack if you want to mirror production closely.

When you run docker compose up, everything spins up consistently, with the same versions, ports, and config every time (as long as you pin image tags such as postgres:16 instead of relying on latest).

It’s reproducibility for humans.

The minimal example (Python + Postgres)

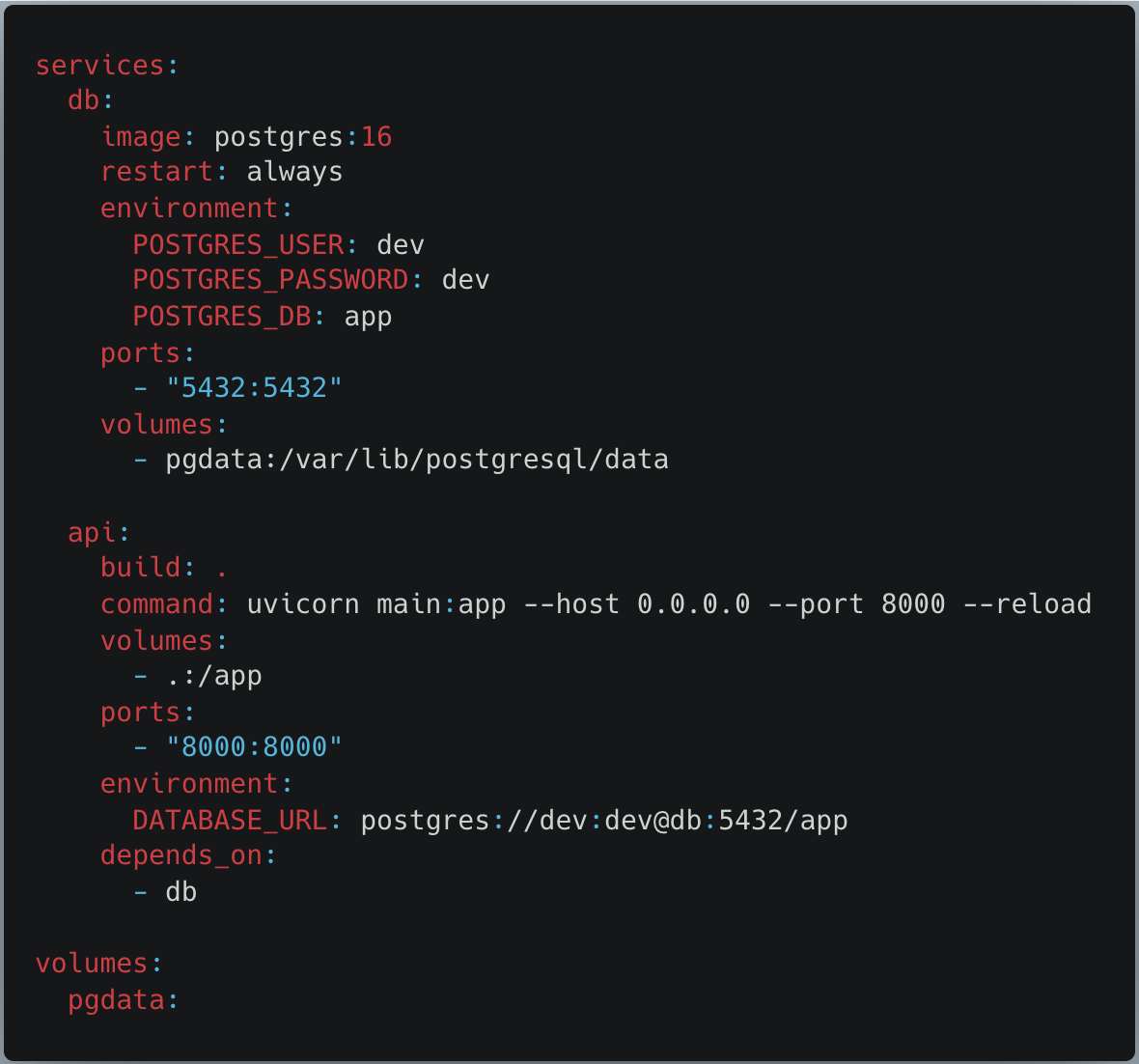

Let’s say you’re building a FastAPI app that talks to Postgres.

Here’s a dead-simple docker-compose.yml to make your life easier:

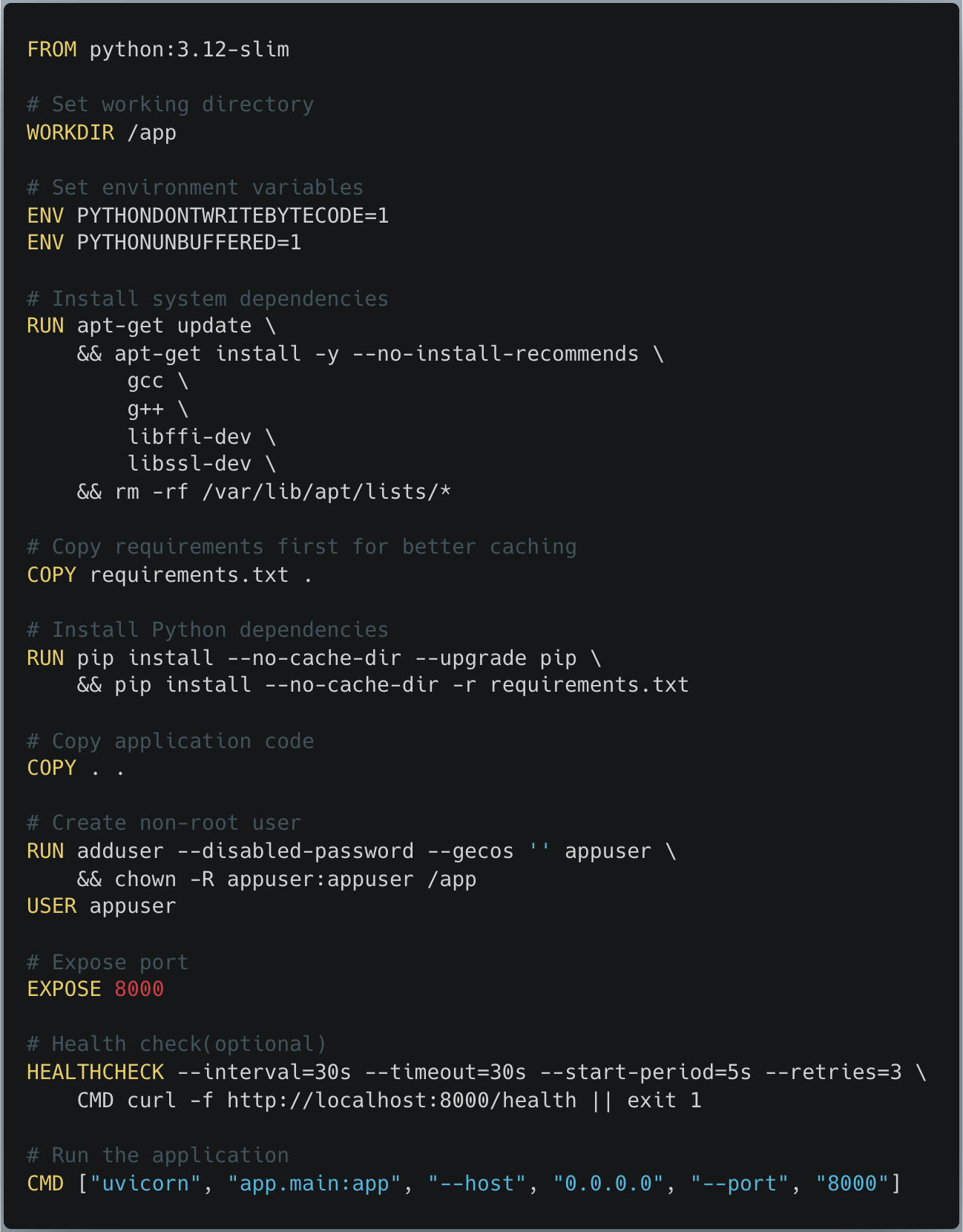

And a Dockerfile to go along with it.
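On the Python side, the one detail that trips people up is the hostname: inside the Compose network, the app reaches Postgres by its service name, not localhost. Here is a minimal sketch of reading the connection settings from the environment. It assumes a service called db, a user dev, and a database app (the names used in the seed command later in this post). DATABASE_URL, POSTGRES_HOST, POSTGRES_PORT and the "dev" password default are hypothetical placeholders. POSTGRES_USER, POSTGRES_PASSWORD and POSTGRES_DB are the variables the official postgres image reads, so one .env file could feed both services.

```python
import os
from urllib.parse import quote


def database_url() -> str:
    """Return the Postgres URL the app should connect to.

    An explicit DATABASE_URL (hypothetical name) wins. Otherwise the URL is
    assembled from environment variables, falling back to the Compose service
    "db", user "dev" and database "app". POSTGRES_HOST, POSTGRES_PORT and the
    "dev" password default are placeholders for this sketch.
    """
    explicit = os.environ.get("DATABASE_URL")
    if explicit:
        return explicit

    # Inside the Compose network, Postgres is reached by service name, not localhost.
    host = os.environ.get("POSTGRES_HOST", "db")
    port = os.environ.get("POSTGRES_PORT", "5432")
    user = os.environ.get("POSTGRES_USER", "dev")
    # Percent-encode the password so characters like "@" or "/" don't break the URL.
    password = quote(os.environ.get("POSTGRES_PASSWORD", "dev"), safe="")
    name = os.environ.get("POSTGRES_DB", "app")
    return f"postgresql://{user}:{password}@{host}:{port}/{name}"
```

Most Postgres drivers and ORMs accept a URL in this form, so database_url() can be passed straight to whichever one you use.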

That’s it.

Now you can run your entire stack with one command:

docker compose up

Your Python app reaches Postgres by its service name (db) on the network Compose creates for you.

No need to brew install, no weird port conflicts, no “is Postgres running?” guessing game.
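One caveat: depends_on only controls the order in which Compose starts containers. It does not wait for Postgres to accept connections unless you pair it with a healthcheck (for example one running pg_isready) and condition: service_healthy. If you would rather handle it in the app, a small standard-library retry loop works. This is a minimal sketch; the function name and defaults are my own, not from any library.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll until a TCP connection to host:port succeeds; return False if timeout expires.

    An open port means Postgres is accepting connections on the socket. It can
    still reject logins for a moment while it finishes starting, so treat this
    as "probably ready", not a guarantee.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Open and immediately close a connection just to probe the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Refused, name not resolvable yet, or connect timed out: retry until the deadline.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

Call wait_for_port("db", 5432) at startup, before opening a connection pool, and exit with a clear error if it returns False.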

Want to mirror Supabase locally?

If you use Supabase in production and want the same stack locally, the Supabase CLI runs it for you, using Docker under the hood.

After running supabase init once in your repo, you can spin up a near-production clone with:

supabase start

That’ll run Postgres, API, auth, and storage locally in containers — no manual setup required.

It’s heavier, but it’s great if you’re testing row-level security, triggers, or anything that depends on Supabase’s stack.
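If you bounce between the Compose database and the Supabase stack, it helps to make the switch a single environment variable. The sketch below assumes a hypothetical DB_TARGET variable. The Supabase entry uses the CLI's default local database port (54322) and postgres/postgres credentials, which you should confirm with supabase status. The Compose entry's "dev" password is a placeholder.

```python
import os
from typing import Optional

# Hypothetical target names mapped to local connection URLs.
LOCAL_DATABASES = {
    # Postgres from docker-compose.yml, as seen from the api container
    # (the "dev" password is a placeholder).
    "compose": "postgresql://dev:dev@db:5432/app",
    # Postgres from `supabase start`, as seen from your host machine; 54322 and
    # postgres/postgres are the CLI defaults, so confirm with `supabase status`.
    "supabase": "postgresql://postgres:postgres@127.0.0.1:54322/postgres",
}


def local_database_url(target: Optional[str] = None) -> str:
    """Pick a local database URL by name, defaulting to the DB_TARGET env var."""
    target = target or os.environ.get("DB_TARGET", "compose")
    if target not in LOCAL_DATABASES:
        raise ValueError(f"unknown DB_TARGET {target!r}; choose from {sorted(LOCAL_DATABASES)}")
    return LOCAL_DATABASES[target]
```

Note where each URL is valid: the Compose one only resolves inside the Compose network, while the Supabase one assumes your app runs directly on the host.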

But isn’t Docker heavy?

Yeah, a little.

The first time you pull images, it’ll download a few hundred MB. After that, it’s fast.

And honestly, the alternative is worse — debugging inconsistent environments and broken local databases.

The real magic isn’t that it’s fast — it’s that it’s reliable.

If you take a break from your project for a month, you can come back and it’ll just work.

That’s worth the disk space.

Bonus: the same setup works for production

Here’s the underrated part — once you have this docker-compose.yml, you’re halfway to a production deployment.

You can:

Build your app image with docker compose build api.

Push it to a registry like Docker Hub or GitHub Container Registry.

Deploy it to Fly.io, Render, Railway, or your VPS — all of which happily accept a pre-built Docker image.

That means the image you run locally is the image you run in production.

No “works on my machine,” no separate Heroku config, no hand-tuned server differences.

You’re testing exactly what you’ll ship.

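For example, to build your image for deployment:

docker compose build api

docker tag yourapp-api your-registry.com/yourapp:latest

docker push your-registry.com/yourapp:latest

Compose v2 names a built image <project>-<service> by default (the project name is usually your directory name), so check docker images for the exact name; the older Compose v1 used an underscore instead. You’ll also need to run docker login your-registry.com once before pushing.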

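Then you can run it on any machine with Docker, passing in the settings it needs (like your database connection) from an env file:

docker run -p 8000:8000 --env-file .env your-registry.com/yourapp:latest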

This alignment — same Dockerfile, same Compose config — is what makes deployment predictable, even as a one-person team.
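If you find yourself retyping the build, tag, and push commands, they fit in a tiny Python script. This is a sketch: the image and registry names are the placeholders from above, LOCAL_IMAGE assumes Compose v2's default naming, and it defaults to a dry run that only prints the commands. If your Compose file sets image: on the api service, docker compose build tags the image with that name directly and the separate tag step becomes unnecessary.

```python
import subprocess
from typing import List

# Hypothetical names: adjust to your Compose project and registry.
LOCAL_IMAGE = "yourapp-api"  # Compose v2 default: <project>-<service>
REMOTE_REPO = "your-registry.com/yourapp"


def release_commands(tag: str = "latest") -> List[List[str]]:
    """Return the build, tag and push steps as argv lists (no shell involved)."""
    remote = f"{REMOTE_REPO}:{tag}"
    return [
        ["docker", "compose", "build", "api"],
        ["docker", "tag", LOCAL_IMAGE, remote],
        ["docker", "push", remote],
    ]


def release(tag: str = "latest", dry_run: bool = True) -> None:
    """Print each step; actually run it only when dry_run is False."""
    for cmd in release_commands(tag):
        print("$", " ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError, stopping at the first failed step.
            subprocess.run(cmd, check=True)
```

Calling release("v1.2.0", dry_run=False) would push a versioned tag, which is easier to roll back to than latest.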

Quality-of-life improvements

Once you’ve got Compose running smoothly, you can make it even nicer:

1. Add a Makefile or script for one-command startup:

Put this in a file named Makefile (the recipe line must start with a tab, not spaces):

up:
	docker compose up --build

Then start everything with:

make up

2. Add a seed script for your DB:

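docker compose exec -T db psql -U dev -d app < seeds.sql

The redirect feeds seeds.sql from your machine into psql, and -T turns off TTY allocation so piped input works. A plain -f seeds.sql would look for the file inside the db container, where it doesn’t exist unless you mount it.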

3. Run tests in the same containers:

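docker compose run --rm api pytest

The --rm flag deletes the one-off container when the run finishes. This assumes pytest is installed in your api image.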

You now have a full, consistent local dev environment that feels like production, without the cloud bill.
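If you’d rather not depend on make (it isn’t installed by default on Windows), the “or script” route can be a small Python file. A sketch, assuming a hypothetical dev.py and the db, dev, app, and api names used above; it wraps the same three tasks from the list.

```python
import subprocess
import sys
from typing import List

# The three tasks from the list above; "db", "dev", "app" and "api" are the names used there.
TASKS = {
    "up": ["docker", "compose", "up", "--build"],
    # -T: no TTY, so psql can read the seed file from stdin.
    # ON_ERROR_STOP=1: make psql exit non-zero on the first failing statement.
    "seed": ["docker", "compose", "exec", "-T", "db",
             "psql", "-U", "dev", "-d", "app", "-v", "ON_ERROR_STOP=1"],
    "test": ["docker", "compose", "run", "--rm", "api", "pytest"],
}


def run_task(name: str, seed_file: str = "seeds.sql") -> int:
    """Run one task and return its exit code."""
    cmd = TASKS[name]
    if name == "seed":
        # Stream the seed file from the host into psql inside the db container.
        with open(seed_file, "rb") as sql:
            return subprocess.run(cmd, stdin=sql).returncode
    return subprocess.run(cmd).returncode


def main(argv: List[str]) -> int:
    """Entry point: exactly one known task name is expected."""
    if len(argv) != 1 or argv[0] not in TASKS:
        print(f"usage: python dev.py {' | '.join(TASKS)}", file=sys.stderr)
        return 2
    return run_task(argv[0])
```

Add if __name__ == "__main__": sys.exit(main(sys.argv[1:])) at the bottom, then run python dev.py up, python dev.py seed, or python dev.py test.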

The point isn’t Docker — it’s repeatability

You’re not doing this to “learn containers.”

You’re doing it because your time is too valuable to waste on setup chores.

A docker-compose.yml file is the indie dev version of a safety net.

You can drop your laptop, clone your repo on a new one, and be productive in 60 seconds flat.

And when it’s time to deploy?

You’re already most of the way there.

TL;DR

Your SaaS deserves a repeatable local setup.

Docker Compose makes it dead simple for Python + Postgres (and Supabase).

It doubles as your build foundation for production images.

You’ll thank yourself every time you reopen an old project or deploy something new.

It’s one of those rare decisions that’s both practical and future-proof.

Set it up once, and your dev-to-prod pipeline gets a lot less fragile.